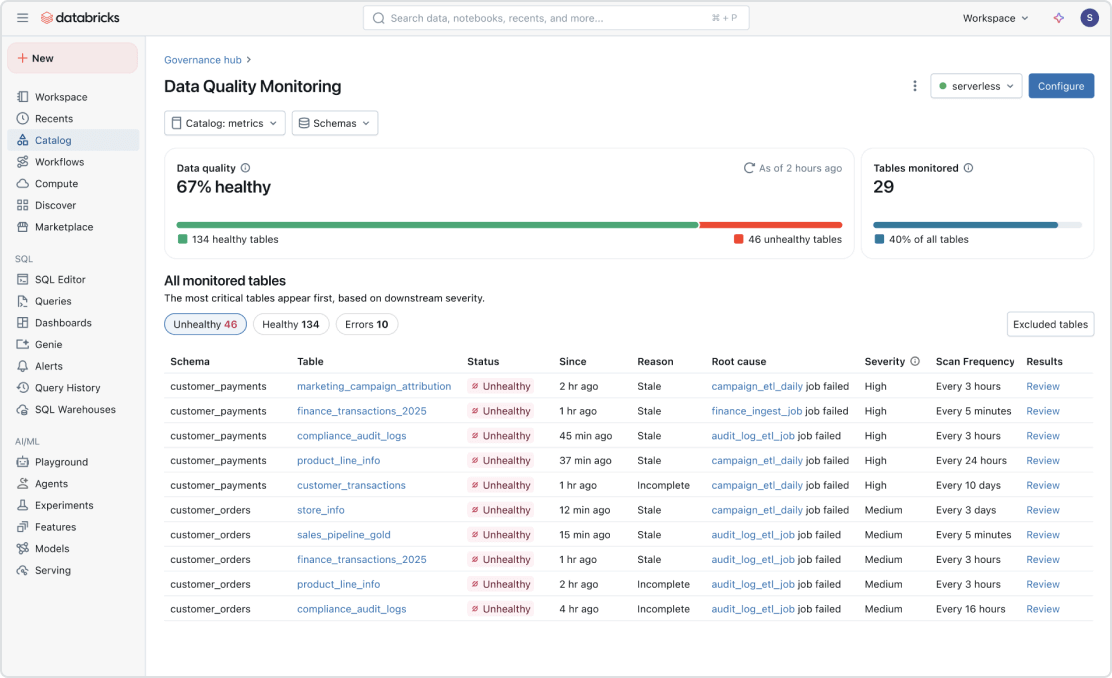

Data Quality Monitoring

Intelligent quality monitoring for data and AI on the lakehouse

What is Data Quality Monitoring?

Databricks Data Quality Monitoring allows teams to monitor the health of their datasets without added tools or complexity. Powered by Unity Catalog, it combines anomaly detection with data profiling (formerly known as Lakehouse Monitoring) to automatically track data quality metrics, statistical trends and anomalies over time. With a single, unified approach enabled by lakehouse architecture, teams can quickly diagnose issues, perform root cause analysis and maintain trust in their data and AI assets.

Data Quality Monitoring capabilities on Databricks

Anomaly detection

Enable scalable data quality monitoring with one click. Databricks automatically analyzes historical data patterns to detect anomalies in table freshness and completeness. With intelligent scanning, only your most important tables are scanned while low-impact ones are skipped. Tables are monitored as they update, so insights stay current with no manual scheduling.
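To make the idea of a freshness anomaly concrete, here is a minimal Python sketch. It is an illustration only and not how Databricks implements detection: the `is_stale` function, its `sigmas` parameter and the mean-plus-standard-deviation rule are all assumptions chosen for simplicity. It learns a table's typical gap between updates from history and flags the table when the current gap is much longer than usual.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def is_stale(update_times, now, sigmas=3.0):
    """Return True if the gap since the last update is unusually long.

    update_times: sorted list of past update timestamps (hypothetical input).
    The threshold is the mean past gap plus `sigmas` standard deviations.
    """
    if len(update_times) < 3:
        return False  # not enough history to judge
    gaps = [
        (later - earlier).total_seconds()
        for earlier, later in zip(update_times, update_times[1:])
    ]
    threshold = mean(gaps) + sigmas * stdev(gaps)
    current_gap = (now - update_times[-1]).total_seconds()
    return current_gap > threshold


# Illustrative history: a table that has updated roughly once an hour.
start = datetime(2024, 1, 1)
history = [start + timedelta(minutes=m) for m in (0, 58, 121, 180, 242)]

on_time = is_stale(history, now=history[-1] + timedelta(minutes=45))
late = is_stale(history, now=history[-1] + timedelta(hours=6))
```

With this history, a check 45 minutes after the last update falls within the usual rhythm, while a check six hours later exceeds the learned threshold. A completeness check could follow the same pattern, using row counts per update in place of time gaps.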

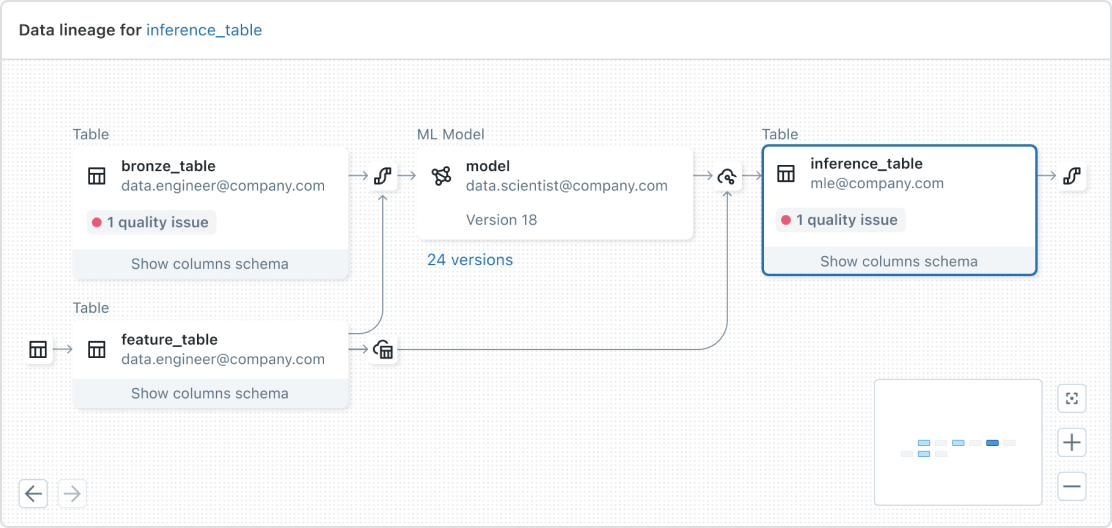

Data profiling

Receive summary statistics for your tables and track historical trends and unexpected changes across your data and ML outputs, helping teams better understand their data and reduce downstream operational toil. Extend monitoring to GenAI applications and machine learning models by profiling inference tables that capture model inputs and predictions.
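As a rough picture of what profiling and trend tracking involve, the sketch below computes a few summary statistics for one column in two time windows and reports which ones moved. The `profile` and `drifted` helpers, the chosen metrics and the thresholds are hypothetical and are not the product's API or its metric definitions.

```python
from statistics import mean


def profile(values):
    """Summarize one column: row count, null fraction, mean of non-null values."""
    non_null = [v for v in values if v is not None]
    return {
        "count": len(values),
        "null_fraction": (1 - len(non_null) / len(values)) if values else 0.0,
        "mean": mean(non_null) if non_null else None,
    }


def drifted(baseline, current, max_null_increase=0.1, max_mean_shift=0.2):
    """List the metrics that changed by more than the given (assumed) limits."""
    flagged = []
    # Null fraction: compare the absolute increase between windows.
    if current["null_fraction"] - baseline["null_fraction"] > max_null_increase:
        flagged.append("null_fraction")
    # Mean: compare the relative shift; skipped if the baseline mean is zero or missing.
    old, new = baseline["mean"], current["mean"]
    if old and new is not None and abs(new - old) / abs(old) > max_mean_shift:
        flagged.append("mean")
    return flagged


# Illustrative data for one numeric column in two windows.
last_week = [10.0, 12.0, 11.0, 9.0]
this_week = [10.0, None, 30.0, None]

flagged = drifted(profile(last_week), profile(this_week))
```

Here both the null fraction and the mean shift past their limits, so both metrics are reported. The same comparison applies to an inference table, with model inputs or predictions as the profiled column.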

Accelerated root cause analysis

Debug data and model quality issues faster by using data profiles, historical trends and anomaly signals to trace issues back to their source. This helps teams reduce time to resolution and improve the reliability of production pipelines.
